How to throttle HTTP calls on the client using a DelegatingHandler

A common issue when you consume an external API is that the service enforces a rate limit on its users. The API only allows x requests in a time period; beyond that it returns an HTTP 429 Too Many Requests error.

Generally, the client will use an exponential backoff strategy, meaning that it waits a little longer after each 429 it gets. This is simple to do, and for most use cases it's sufficient.
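For comparison, here is a minimal sketch of what exponential backoff could look like. The class and method names (BackoffExample, DelayFor, GetWithBackoffAsync) and the doubling schedule are my own assumptions for illustration; they are not part of the demo app.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

public static class BackoffExample
{
    // Hypothetical helper: the delay doubles with every attempt
    // (baseDelay, 2x, 4x, ...). Assumes a small attempt count.
    public static TimeSpan DelayFor(int attempt, TimeSpan baseDelay) =>
        TimeSpan.FromTicks(baseDelay.Ticks * (1L << attempt));

    // Hypothetical helper: repeats a GET while the server answers 429,
    // giving up after maxRetries retries and returning the last response.
    public static async Task<HttpResponseMessage> GetWithBackoffAsync(
        HttpClient client, string url, int maxRetries, TimeSpan baseDelay,
        CancellationToken cancellationToken = default)
    {
        for (var attempt = 0; ; attempt++)
        {
            var response = await client.GetAsync(url, cancellationToken);

            // 429 is cast from int so this also compiles on older frameworks.
            if (response.StatusCode != (HttpStatusCode)429 || attempt >= maxRetries)
            {
                return response;
            }

            response.Dispose();
            await Task.Delay(DelayFor(attempt, baseDelay), cancellationToken);
        }
    }
}
```

The drawback for a high traffic service is that every 429 is a wasted round trip, which is what the throttling approach tries to avoid.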

What should you do if your high traffic service needs to get through as many requests as it possibly can in as short a time as possible? One approach that I like is to use a DelegatingHandler to configure how many requests the client should attempt in a time period. A DelegatingHandler is middleware that you attach to an HttpClient; it can run code before and after each HTTP call.
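To make the "before and after" idea concrete, here is a minimal sketch of a handler that times each call. TimingHandler, StubHandler and TimingDemo are hypothetical names for illustration only; the stub stands in for the network so that the example runs offline.

```csharp
using System;
using System.Diagnostics;
using System.Net;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical handler, not part of the demo app.
public class TimingHandler : DelegatingHandler
{
    protected override async Task<HttpResponseMessage> SendAsync(
        HttpRequestMessage request, CancellationToken cancellationToken)
    {
        // Runs before the request goes out.
        var stopwatch = Stopwatch.StartNew();

        // Hands the request to the next handler in the chain.
        var response = await base.SendAsync(request, cancellationToken);

        // Runs after the response comes back.
        Console.WriteLine($"{request.Method} {request.RequestUri} took {stopwatch.ElapsedMilliseconds} ms");
        return response;
    }
}

// Hypothetical innermost handler that answers 200 without touching the network.
public class StubHandler : HttpMessageHandler
{
    protected override Task<HttpResponseMessage> SendAsync(
        HttpRequestMessage request, CancellationToken cancellationToken) =>
        Task.FromResult(new HttpResponseMessage(HttpStatusCode.OK));
}

public static class TimingDemo
{
    public static async Task<HttpStatusCode> RunAsync()
    {
        // Handlers are chained through InnerHandler: TimingHandler -> StubHandler.
        var client = new HttpClient(new TimingHandler { InnerHandler = new StubHandler() });
        var response = await client.GetAsync("http://example.test/");
        return response.StatusCode;
    }
}
```

In a real app the innermost handler would be an HttpClientHandler, or the chain would be built for you by IHttpClientFactory.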

I created a small console app with source here to demonstrate how to put it all together.

In the app I created a DelegatingHandler called ThrottleRequestHandler, which I can configure with a maximum number of requests per time period.

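    using System;
    using System.Collections.Generic;
    using System.Net.Http;
    using System.Threading;
    using System.Threading.Tasks;

    public class ThrottleRequestHandler : DelegatingHandler
    {
        private readonly int _maxRequests;
        private readonly TimeSpan _period;

        // Timestamps of the most recent requests, newest first.
        private readonly LinkedList<DateTime> _previousRequests = new LinkedList<DateTime>();
        private readonly SemaphoreSlim _lock = new SemaphoreSlim(1, 1);

        public ThrottleRequestHandler(int maxRequests, TimeSpan period)
        {
            _maxRequests = maxRequests;
            _period = period;
        }

        protected override async Task<HttpResponseMessage> SendAsync(HttpRequestMessage request, CancellationToken cancellationToken)
        {
            // Wait outside the try block: if the wait is cancelled, the finally
            // block must not release a semaphore that was never acquired.
            await _lock.WaitAsync(cancellationToken);
            try
            {
                if (_previousRequests.Count >= _maxRequests)
                {
                    // Last is the oldest request still inside the window.
                    var timeSinceOldest = DateTime.UtcNow - _previousRequests.Last.Value;
                    if (timeSinceOldest < _period)
                    {
                        await Task.Delay(_period - timeSinceOldest, cancellationToken);
                    }
                    _previousRequests.RemoveLast();
                }
                _previousRequests.AddFirst(DateTime.UtcNow);
            }
            finally
            {
                _lock.Release();
            }

            // The lock only guards the bookkeeping above. The request is sent after
            // releasing it so that a slow response does not hold up other callers.
            return await base.SendAsync(request, cancellationToken);
        }
    }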

In this implementation I use a SemaphoreSlim like a mutex, because unlike a lock statement it has an async wait method. The reason I need a lock at all is that LinkedList isn't thread safe, and my logic relies on only one caller at a time checking and updating the previous requests.
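The pattern in isolation looks roughly like the sketch below. AsyncLockExample and RunAsync are hypothetical names; the point is only that a SemaphoreSlim(1, 1) lets you await inside the critical section, which a lock statement does not allow.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class AsyncLockExample
{
    // Hypothetical demo: adds `count` items to a LinkedList from many tasks
    // at once and returns how many items ended up in the list.
    public static async Task<int> RunAsync(int count)
    {
        var gate = new SemaphoreSlim(1, 1); // one permit, so it behaves like a mutex
        var items = new LinkedList<int>();  // not thread safe on its own

        async Task AddAsync(int value)
        {
            await gate.WaitAsync();
            try
            {
                // Awaiting here is fine; inside a lock block it would not compile.
                await Task.Yield();
                items.AddFirst(value);
            }
            finally
            {
                gate.Release();
            }
        }

        await Task.WhenAll(Enumerable.Range(0, count).Select(i => Task.Run(() => AddAsync(i))));
        return items.Count;
    }
}
```

Because only one task at a time holds the permit, every item is added without corrupting the list.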

To use this, you just need to register it against a named HttpClient on startup.

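    using System;
    using System.Threading.Tasks;
    using Microsoft.Extensions.DependencyInjection;
    using Microsoft.Extensions.Hosting;

    public class Program
    {
        public static async Task Main(string[] args)
        {
            await CreateHostBuilder(args).Build().RunAsync();
        }

        public static IHostBuilder CreateHostBuilder(string[] args) =>
            Host.CreateDefaultBuilder(args)
                .ConfigureServices((hostContext, services) =>
                {
                    services.AddHttpClient("google", c =>
                        {
                            c.BaseAddress = new Uri("https://www.google.com/search");
                        })
                        // This factory runs each time IHttpClientFactory builds a handler
                        // pipeline, so the throttle state lives as long as that pipeline.
                        .AddHttpMessageHandler(c => new ThrottleRequestHandler(maxRequests: 2, period: TimeSpan.FromSeconds(10)));

                    services.AddHostedService<GoogleSearcher>();
                });
    }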

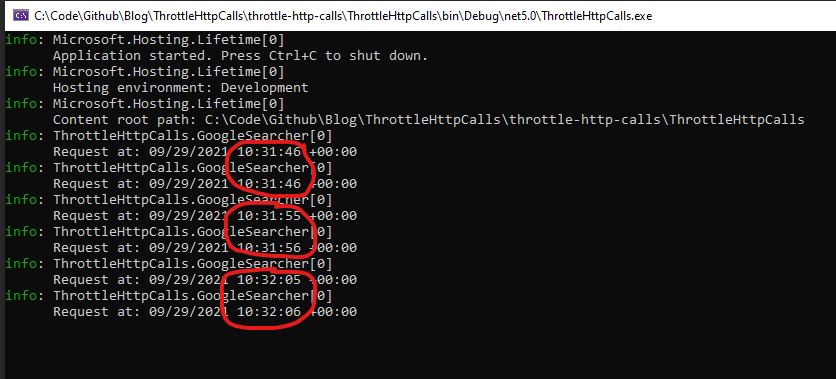

To demonstrate it working I configured it to allow only 2 requests every 10 seconds.
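To see what that configuration means in practice, here is a small simulation of the same window check the handler performs. It is only a sketch under two assumptions of mine: requests arrive back to back, and the calls themselves take no time. ThrottleSimulation and SendTimes are hypothetical names.

```csharp
using System.Collections.Generic;

public static class ThrottleSimulation
{
    // Returns the simulated send time (in seconds) of each request.
    public static List<double> SendTimes(int requests, int maxRequests, double periodSeconds)
    {
        var window = new LinkedList<double>(); // newest first, like the handler
        var sendTimes = new List<double>();
        var now = 0.0;

        for (var i = 0; i < requests; i++)
        {
            if (window.Count >= maxRequests)
            {
                // Wait until the oldest request in the window is a full period old.
                var sinceOldest = now - window.Last.Value;
                if (sinceOldest < periodSeconds)
                {
                    now += periodSeconds - sinceOldest;
                }
                window.RemoveLast();
            }
            window.AddFirst(now);
            sendTimes.Add(now);
        }
        return sendTimes;
    }
}
```

Calling ThrottleSimulation.SendTimes(6, 2, 10) gives 0, 0, 10, 10, 20, 20: requests go out in pairs, ten seconds apart.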

Then my worker just calls the endpoint in a loop, but it is throttled by my middleware.

    using System;
    using System.Net.Http;
    using System.Threading;
    using System.Threading.Tasks;
    using Microsoft.Extensions.Hosting;
    using Microsoft.Extensions.Logging;

    public class GoogleSearcher : BackgroundService
    {
        private readonly ILogger<GoogleSearcher> _logger;
        private readonly HttpClient _googleSearch;

        public GoogleSearcher(ILogger<GoogleSearcher> logger, IHttpClientFactory clientFactory)
        {
            _logger = logger;

            // The named client has the ThrottleRequestHandler in its pipeline.
            _googleSearch = clientFactory.CreateClient("google");
        }

        protected override async Task ExecuteAsync(CancellationToken stoppingToken)
        {
            while (!stoppingToken.IsCancellationRequested)
            {
                // The response body is not used; only the request timing matters here.
                await _googleSearch.GetStringAsync("?q=Who%20else%20doesn%27t%20want%20the%20Springboks%20to%20change%20their%20style%3F");
                _logger.LogInformation("Request at: {time}", DateTimeOffset.UtcNow);
            }
        }
    }

You can see from the logged request times that it works!